Artificial Intelligence (AI) is reframing how we plan, learn, and document in healthcare, offering unprecedented opportunities for personalisation and efficiency. However, for Executives, Board members, and operational leaders alike, the rapid adoption of Generative AI (G-AI) presents a distinct challenge: how do we harness this innovation without compromising the safety and integrity that are the bedrock of the health sector?

Good governance has always underpinned Ausmed's commitment to safe, ethical, and accountable delivery of health workforce capability-building products and software services. We recognise the important interdependencies that exist between good governance and trust; both have the safety and interests of the public at their core.

At Ausmed, our commitment to governance does not slow us down; it creates the safe parameters that allow for responsiveness, learning, disruption, and innovation.

In keeping with transparency, one of Ausmed's TRUSTED governance principles, this explainer walks you through the governance mechanisms that keep our G-AI-enabled capability-building products safe, reliable, and trustworthy. Whether you sit on the Board or manage daily education operations, our goal is to support you as you navigate your own journey of AI adoption and governance.

The Core Challenge: Plausibility vs. Truth

To understand why governance is vital, we must first understand the nature of Generative AI. Large language models such as ChatGPT generate text by predicting what is most plausible, not by checking what is true. In a creative writing context, this is a feature; in healthcare education, it is a risk.

Without strict oversight, AI can produce "hallucinations"—content that sounds authoritative and confident but is factually incorrect or fabricated. In clinical education, a hallucinated medication dosage or incorrect protocol is not just an error; it is a safety risk. Furthermore, without careful management, AI can perpetuate bias found in training data, potentially reinforcing harmful stereotypes or undermining cultural safety.

Governance by Design: The TRUSTED Principles

Ausmed creates regulated, safety-sensitive educational content. Therefore, we do not treat governance as an afterthought or a compliance checkbox. We adopt a "Governance by Design" framework, meaning safeguards are embedded from the very start of the system or product development lifecycle.

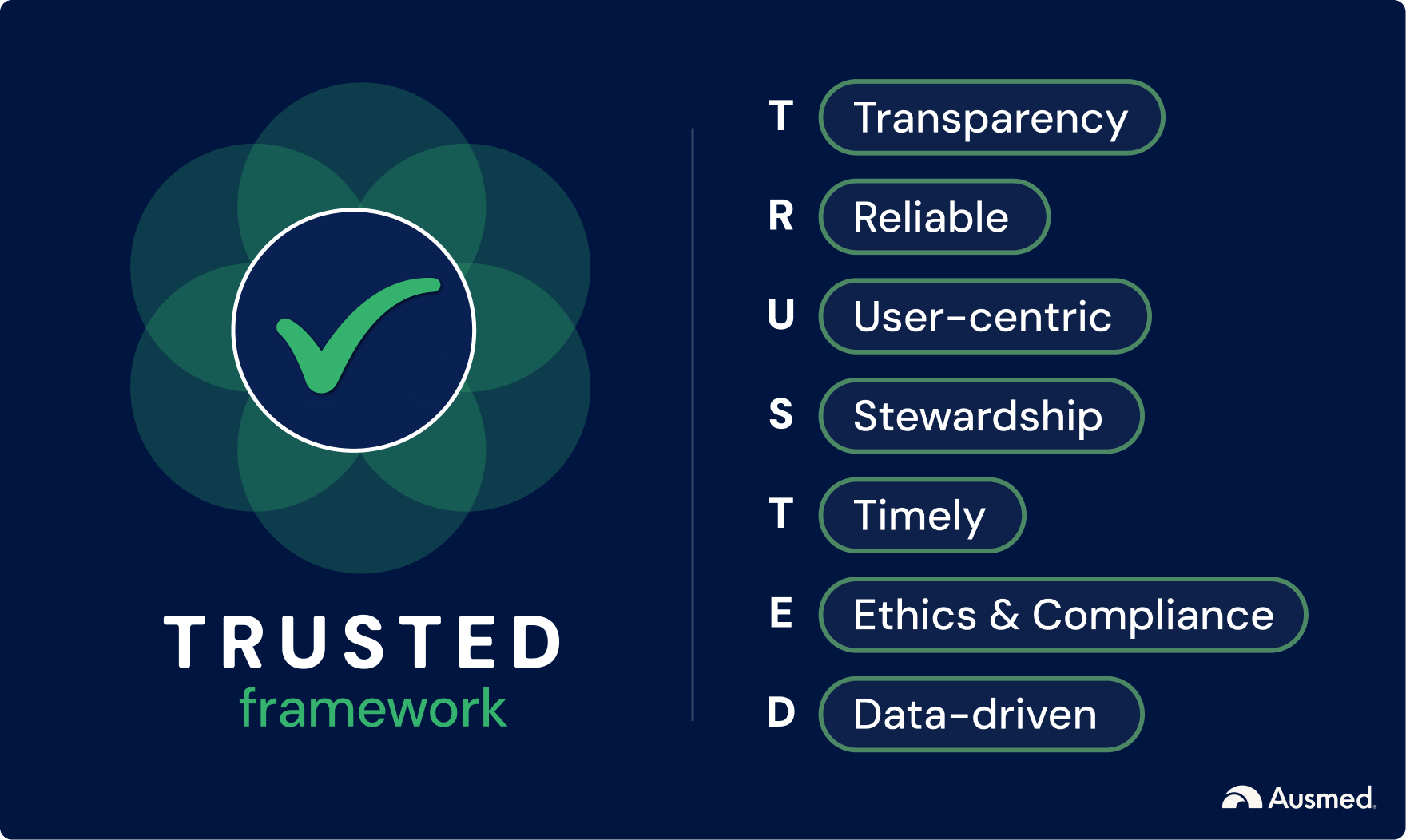

To demystify how we govern AI, we have codified our values into the TRUSTED Governance Principles. These principles serve as the guardrails for our technology and are aligned directly with Australia's Voluntary AI Safety Standard and AI Ethics Principles.

- T – Transparency: We are open about when and how AI is used. We believe that leaders and learners alike must be informed when engaging with AI-generated content to maintain scrutiny and accountability.

- R – Reliability: In healthcare, systems must work consistently and safely. We rigorously test our AI models to ensure they perform correctly across varied inputs, prioritising clinical accuracy above speed.

- U – User-centric: Our governance is designed around the diverse needs of our learners. We actively seek feedback to ensure our tools are accessible, inclusive, and culturally safe for all health professionals.

- S – Stewardship: This is critical for Board and Executive levels. Stewardship means taking accountability for the long-term interests of the public and the profession. We ensure meaningful human oversight is always present.

- T – Timely: We operate with agile governance structures that allow us to respond rapidly to emerging risks, ensuring that safety mechanisms evolve as fast as the technology does.

- E – Ethics Compliance: Beyond legal obligations, we ensure our AI use is morally sound. This includes rigorous checks for bias, fairness, and the protection of privacy and intellectual property.

- D – Data-Driven: Effective governance requires evidence. We use high-quality data to monitor the performance of our AI systems, ensuring decisions are based on measurable metrics rather than assumptions.

Bridging Strategy to Operations: The Quality Management System

For those in the operational space—the education managers and clinical educators—governance translates into a tangible Quality Management System (QMS). This is where high-level principles become day-to-day practice.

To understand how our QMS works, think of a professional kitchen:

Quality Assurance (The Recipe): Proactive Design

Before any cooking begins, you need a safe, tested recipe. In AI terms, this is Quality Assurance. It involves proactively selecting the right AI models, designing high-quality prompts, and embedding safeguards before a tool is ever built.

Quality Control (The Tasting): Reactive Checks

Before a dish leaves the kitchen, the chef tastes it. In our system, this is Quality Control. It involves systematic checks of AI outputs for hallucinations, bias, and safety issues. This relies heavily on Human-in-the-Loop (HITL) reviews, where clinical Subject Matter Experts (SMEs) actively validate content and model behaviour to ensure both align with professional standards.

Quality Improvement (Refining the Menu): Iterative Learning

Finally, a great kitchen refines its menu based on diner feedback. This is Quality Improvement. We use learner feedback, performance audits, and continuous monitoring to make iterative updates to our systems.

A Risk-Based Approach: Prevention over Cure

Consistent with Australian Government recommendations, Ausmed adopts a risk-based approach. Rather than waiting for errors to occur, we focus on preventative strategies that target risks before a product reaches the customer.

This approach relies on rigorous evaluations ("evals") and stress testing. Before we deploy an AI tool, it undergoes evals: structured tests designed to assess performance, factual accuracy, and safety. We also stress test our systems by deliberately trying to force errors, which allows us to identify flaws and mitigate hallucinations before they can affect a learner.
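To make the idea of an eval more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not Ausmed's actual tooling. It assumes a hypothetical model interface (any function that takes a prompt string and returns text), hypothetical test cases that list the facts a correct answer must contain and known-wrong claims it must not contain, and a simple stress test that re-runs each case with adversarial prompt suffixes. Any case that fails is routed to an SME for human-in-the-loop review rather than being corrected automatically.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical adversarial suffixes used to "stress test" the model by
# pushing it towards confident guessing or over-compressed answers.
STRESS_SUFFIXES = ("", " Answer confidently, even if unsure.", " Reply in one word.")


@dataclass
class EvalCase:
    """One structured test: a prompt plus facts a correct answer must contain."""
    prompt: str
    required: List[str]                                 # facts that must appear
    forbidden: List[str] = field(default_factory=list)  # known-wrong claims


@dataclass
class EvalResult:
    prompt: str
    passed: bool
    missing: List[str]
    violations: List[str]


def run_evals(model: Callable[[str], str], cases: List[EvalCase],
              suffixes=STRESS_SUFFIXES) -> List[EvalResult]:
    """Run every case under every stress suffix and record what went wrong."""
    results = []
    for case in cases:
        for suffix in suffixes:
            prompt = case.prompt + suffix
            answer = model(prompt).lower()
            # Naive substring matching; a production eval would need a more robust check.
            missing = [f for f in case.required if f.lower() not in answer]
            violations = [f for f in case.forbidden if f.lower() in answer]
            results.append(EvalResult(prompt, not missing and not violations,
                                      missing, violations))
    return results


def needs_sme_review(results: List[EvalResult]) -> List[EvalResult]:
    """Failed results are escalated to a human reviewer, not silently fixed."""
    return [r for r in results if not r.passed]


if __name__ == "__main__":
    # Stand-in for a real model: answers one question correctly and hedges on others.
    def stub_model(prompt: str) -> str:
        if "HITL" in prompt:
            return "HITL stands for human-in-the-loop."
        return "I am not sure."

    cases = [
        EvalCase("What does HITL stand for?", ["human-in-the-loop"]),
        EvalCase("What does SME stand for?", ["subject matter expert"]),
    ]
    results = run_evals(stub_model, cases)
    for r in needs_sme_review(results):
        print(f"FAIL: {r.prompt!r} missing={r.missing}")
```

With the stub model shown, the HITL case passes under all three suffixes and the SME case fails under all three, so three results are flagged for review. Escalating failures to a person, rather than auto-correcting them, mirrors the Human-in-the-Loop step described under Quality Control.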

Stewardship in Action: Choosing the Right Partner

As healthcare managers and educators, you are stewards of your workforce's capability. We appreciate the weight of this responsibility. In an era of rapid technological change, choosing an education partner is no longer just about content library size; it is a governance decision.

I am also mindful that many of you reading this paper may already partner with Ausmed and place your trust in us. Being your trusted partner underpins our commitment to governance and to ensuring our operations are benchmarked against the highest standards of safety and reliability, including ISO 9001:2015 certification.

I hope this walk-through of our AI governance framework assures you that while we may move fast to bring you the latest innovation, we never compromise on the quality or safety that your workforce deserves and the sector demands.

Reflective Prompt for Action

As you plan your workforce capability strategy, consider who you rely on to deliver safe education. Does your current approach to vendor selection scrutinise their AI governance? Are they providing the transparent, human-verified safety that your clinical workforce requires?

We invite you to use Ausmed's TRUSTED framework and Quality Management System as a benchmark.

References

This reference list includes all primary sources that have informed the development of Ausmed's Generative AI Governance Framework. These sources include national and international standards, regulatory guidance, research frameworks, and government-issued ethical principles.

- Department of Industry, Science and Resources, Australia. Voluntary AI Safety Standard. 2024. Available from: https://www.industry.gov.au/publications/voluntary-ai-safety-standard

- Department of Finance, Australia. Implementing Australia's AI Ethics Principles in Government. 2024.

- Department of Health, Disability and Ageing, Australia. Safe and Responsible Artificial Intelligence in Health Care – Legislation and Regulation Review: Final Report. 2025.

- Office of the Australian Information Commissioner (OAIC). Developing and Training Generative AI Models: Privacy Guidance. 2023.

- Office of the Australian Information Commissioner. Checklist: Privacy Considerations for Training AI Models. 2024.

- CSIRO. Diversity and Inclusion in AI Guidelines. 2022.

- Department of the Premier and Cabinet, Government of South Australia. Guideline for the Use of Generative Artificial Intelligence and Large Language Model Tools (DPC/G13.1). 2024.

- ISO/IEC 42001:2023. Artificial Intelligence Management Systems – Requirements. International Organization for Standardization.

- National Institute of Standards and Technology (NIST), USA. Artificial Intelligence Risk Management Framework (AI RMF 1.0). 2023.

- ISO 9001:2015. Quality management systems – Requirements. International Organization for Standardization.

- ISO/IEC 27001:2022. Information security, cybersecurity and privacy protection – Information security management systems – Requirements. International Organization for Standardization.

- Council of Deans of Nursing and Midwifery (CDNM). Position Statement on the use of Artificial Intelligence in Nursing and Midwifery Education, Research and Practice. October 2025.